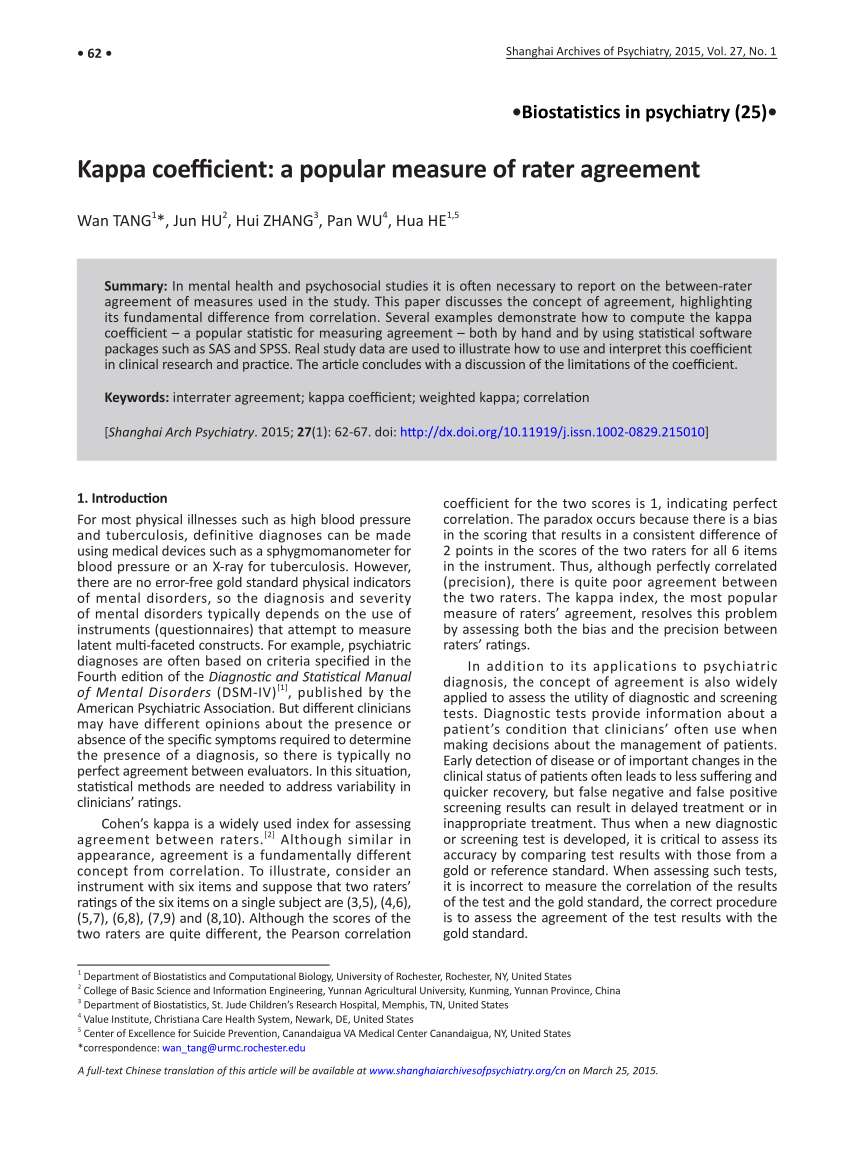

Inter-Annotator Agreement (IAA). Pair-wise Cohen kappa and group Fleiss'… | by Louis de Bruijn | Towards Data Science

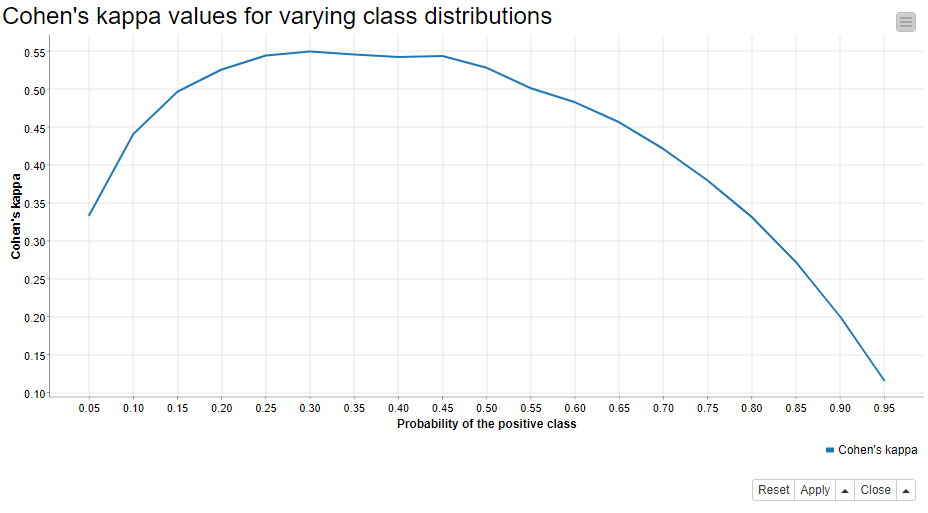

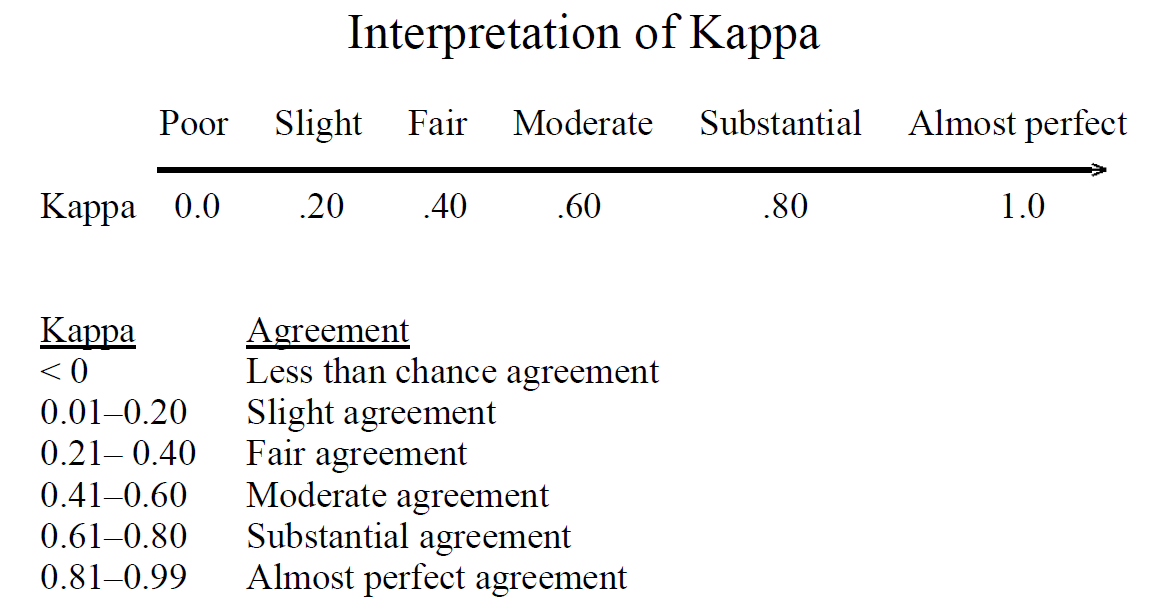

Explaining the unsuitability of the kappa coefficient in the assessment and comparison of the accuracy of thematic maps obtained by image classification - ScienceDirect

The kappa coefficient of agreement. This equation measures the fraction...

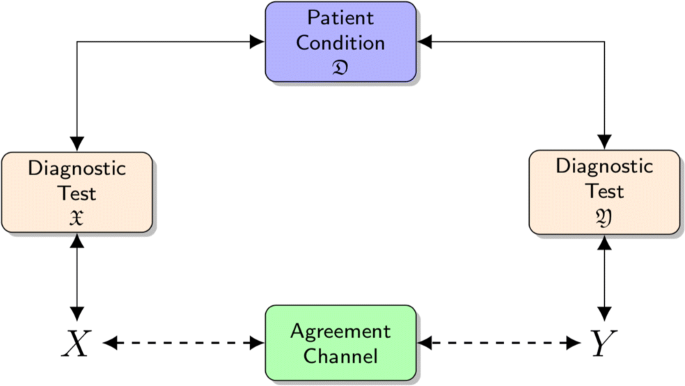

Beyond kappa: an informational index for diagnostic agreement in dichotomous and multivalue ordered-categorical ratings | SpringerLink